Other accounts:

All of my comments are licensed under the following license

- 28 Posts

- 147 Comments

182·11 days ago

Got this from the lemmy scripts community:

91·14 days ago

dbzer0 is anarchist though.

21·14 days ago

> The point is to pick out the users that only like to pick fights or start trouble, and don’t have a lot that they do other than that, which is a significant number. You can see some of them in these comments.

OK, that explains why you chose these specific mechanics. Does that mean hostile but popular comments in the wrong communities would get a pass, though?

For example, let’s assume that most people on Lemmy love cars (probably not the case, but let’s go with it) and there are a few commenters who consistently show up in the !fuck_cars@lemmy.ml or !fuckcars@lemmy.world community to explain why everyone in that community is wrong. Or vice versa.

Since most people scroll All, those comments could get elevated while comments from the people the community is supposed to be for get downvoted.

I mean, it’s not that big of a deal now because most values are shared across Lemmy, but I can already see that starting to shift a bit.

I was reminded of this meme a bit

> Initially, I was looking at the bot as its own entity with its own opinions, but I realized that it’s not doing anything more than detecting the will of the community with as good a fidelity as I can achieve.

Yeah, that’s the main benefit I see coming from this bot, especially if its output is only given as suggestions, humans are still making most of the judgement calls, and the way it makes decisions is transparent (like the appeal community you suggested).

I still think that instead of the bot treating all of Lemmy as one community, it would be better if moderators could give it a focus, because there are differences in values between instances and communities that should be reflected in the moderation decisions that are taken.

However, if you aren’t planning on developing that side of it more, I think you could still let other moderators who want to test the bot see a notification from it any time it has a suggestion for a community user ban (edit: for clarification) as a test run. Good luck.

31·15 days ago

> But in general, one reason I really like the idea is that it’s getting away from one individual making decisions about what is and isn’t toxic and outsourcing it more to the community at large and how they feel about it, which feels more fair.

Yeah, that does sound useful. It is just that there are some communities where it isn’t necessarily clear who is a jerk and who has a controversial minority opinion. For example, how do you think the bot would’ve handled the vegan community debacle that happened? There were a lot of trusted users who were not necessarily on the side of vegans, and it could’ve made those communities revert to the norm of what most users think is good and bad.

> I think giving people some insight into how it works, and ability to play with the settings, so to speak, so they feel confident that it’s on their side instead of being a black box, is a really good idea. I tried some things along those lines, but I didn’t get very far along.

If you want, I can help with that. Like you said, it sounds like a good way of decentralizing moderation so that we have fewer problems with power-tripping moderators and more transparent decisions. I just want communities to be able to keep their specific values while easing their moderation burden.

91·15 days ago

Is there a way of tailoring the moderation to a community’s needs? One problem that I can see arising is that it could lead to a monoculture of moderation practices. If there is a way of making the auto reports relative to each community’s norms, that would be interesting.

2·17 days ago

Maybe we should look for ways of tracking coordinated behaviour. A definition I’ve heard for social media propaganda is “coordinated inauthentic behaviour”, and while I don’t think it’s possible to determine whether a user is being authentic or not, it should be possible to see whether there is consistent behaviour between different kinds of users and what they are coordinating on.

Edit: Because all bots have a purpose eventually, and that should be visible.

Edit2: Eww, I just realized the term came from Meta. If someone has a better term I will use that instead.
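One way the coordination check described above could be sketched: compare the sets of things each account acted on and flag pairs whose sets overlap almost completely. This is only a rough illustration. The account names, item IDs, thresholds, and the `coordinated_pairs` helper are all made up for the example, and high overlap on its own says nothing about whether the accounts are authentic.

```python
from itertools import combinations

def jaccard(a, b):
    """Share of items two accounts have in common, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def coordinated_pairs(actions, threshold=0.8, min_items=3):
    """Return (user_a, user_b, score, shared_items) for highly overlapping accounts.

    `actions` maps a user name to the set of item IDs (posts, comments)
    that the user voted on or replied to. All values here are hypothetical.
    """
    flagged = []
    for u1, u2 in combinations(sorted(actions), 2):
        s1, s2 = actions[u1], actions[u2]
        # Skip accounts with too little activity to compare meaningfully.
        if len(s1) < min_items or len(s2) < min_items:
            continue
        score = jaccard(s1, s2)
        if score >= threshold:
            flagged.append((u1, u2, score, sorted(s1 & s2)))
    return flagged

# Hypothetical activity log: which items each account acted on.
example = {
    "alice": {"p1", "p2", "p3", "p4"},
    "bob": {"p1", "p2", "p3", "p4"},
    "carol": {"p2", "p9", "p10"},
}
print(coordinated_pairs(example))
```

The shared items in each flagged tuple are the "what they are coordinating on" part; a human would still have to look at them and decide whether it means anything.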

2·18 days ago

Kagi doesn’t really have its own index either. It mainly relies on other search engines as well, and the small-web focus of the indexes that are its own is done better by marginalia.nu, which is also open source.

21·18 days ago

It is a meta-search engine, so it takes results from other search engines and displays them. Usually you can decide which search engines to use in the preferences. You can host it yourself or find an online instance to use.

91·18 days ago

Most searxng instances have a similar lens for lemmy comments, so you can do that too if you want an open source alternative.

810·18 days ago

Probably, but which instance has over 70,000 users?

1·1 month ago

> such that your model could be “riding along on a human surfboard with human guidance”

Sorry I don’t really understand what you’re saying here.

1·1 month ago

Good point. I have been a lot more active in tailoring my experience here compared to other social media. I wish there were more tools for deciding whether or not you want to block someone, though. Sometimes it’s not as simple as just looking at their post history. Also, as an aside, I wish it were possible to block votes as well, so that the ranking of the content could be personalized too.

5·1 month ago

> I’m going to be bold enough to say we don’t have as wide of an AI/LLM issue on the Fediverse as the other platforms will have.

Why do you think that? I don’t think there is anything systemic in how the fediverse operates that will stop LLMs from polluting the discourse here too. Actually, I think they already are polluting the discourse here.

26·1 month ago

That sucks. So much research is being twisted by humanity’s greed. I hope that whatever comes after the internet becomes useless is better.

22·1 month ago

Long distances actually don’t mean much; there is no guarantee that they correlate with anything. It is mostly the local groups that are preserved, plus a bit of the global structure.

42·2 months ago

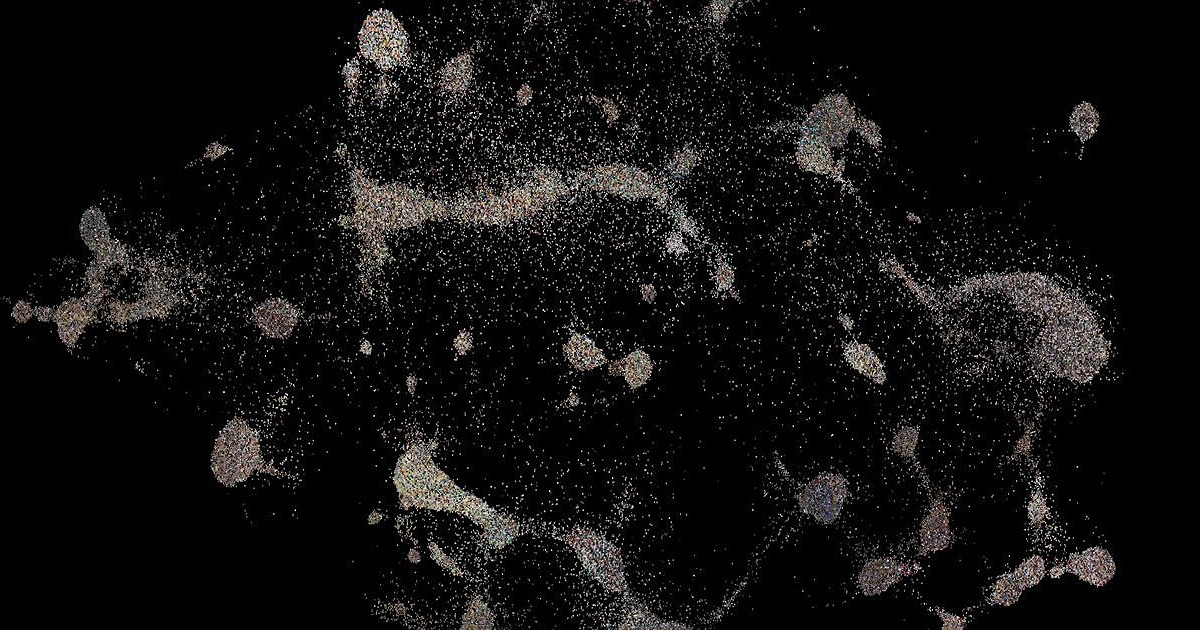

I had to try scraping the websites multiple times because of stupid bugs I put in the code beforehand, so I might have put more strain on the instances than I meant to. If I did this again it would hopefully be much less taxing on the servers.

As for the cost of scraping, it actually isn’t that hard. I just had it running in the background most of the time.

The original data had 21,000+ features. I used an algorithm to reduce the dimensions to 2 while keeping a similar structure (so similar communities are close and dissimilar communities are far away).

So the axes don’t really mean anything in particular.
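For anyone curious what "reduce the dimensions to 2" can look like, here is a minimal sketch using plain PCA computed by power iteration. PCA is swapped in purely because it fits in a few lines of standard-library Python; the comment above does not name the algorithm that was actually used, and methods that mainly preserve local neighbourhoods behave differently. The function names and the tiny example matrix are made up for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def center(rows):
    """Subtract the per-feature mean so the data is centred on the origin."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[x - m for x, m in zip(row, means)] for row in rows]

def top_direction(rows, iters=200):
    """Power iteration: the unit direction along which the rows vary the most."""
    d = len(rows[0])
    v = [float(j + 1) for j in range(d)]  # fixed, non-uniform start keeps runs repeatable
    norm = math.sqrt(dot(v, v))
    v = [x / norm for x in v]
    for _ in range(iters):
        scores = [dot(row, v) for row in rows]
        # w = X^T (X v), i.e. one multiplication by the (unscaled) covariance matrix
        w = [sum(s * row[j] for s, row in zip(scores, rows)) for j in range(d)]
        norm = math.sqrt(dot(w, w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v

def reduce_to_2d(rows):
    """Project each row onto the two strongest directions of variation."""
    centred = center(rows)
    first = top_direction(centred)
    # Remove the first direction from the data, then find the next-best one.
    deflated = []
    for row in centred:
        s = dot(row, first)
        deflated.append([x - s * f for x, f in zip(row, first)])
    second = top_direction(deflated)
    return [(dot(row, first), dot(row, second)) for row in centred]

# Hypothetical data: 6 "communities" with 5 features each (the real data had 21,000+).
communities = [
    [5, 5, 0, 0, 0], [5, 4, 0, 0, 1], [4, 5, 1, 0, 0],
    [0, 0, 5, 5, 0], [0, 1, 5, 4, 0], [1, 0, 4, 5, 0],
]
for point in reduce_to_2d(communities):
    print(point)
```

The two output coordinates are mixtures of all the input features, which is why the axes have no meaning of their own.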

Probably a WebGL problem. I had to use Ungoogled Chromium to open the page. I think it works on regular Firefox too.

72·2 months ago

Yeah, that sounds like a good idea, so you can see how connected local communities are. It probably makes more sense to use the original dimensions so no extra information is lost.

It shows 93 comments and 2 posts for me. It probably just hasn’t federated to your instance yet.
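A rough sketch of measuring connectedness in the original dimensions rather than in the 2D picture: cosine similarity between sparse feature vectors, then the nearest neighbours of a community. What the features actually are is not stated here, so the example assumes (hypothetically) that each feature is a user and each weight is an activity count; the community names and the `nearest` helper are invented for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as {feature: weight}."""
    shared = sum(w * v[k] for k, w in u.items() if k in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return shared / (norm_u * norm_v)

def nearest(name, vectors, k=2):
    """Names of the k communities most similar to `name` in the original space."""
    scored = [
        (cosine(vectors[name], vec), other)
        for other, vec in vectors.items()
        if other != name
    ]
    scored.sort(reverse=True)
    return [other for _, other in scored[:k]]

# Hypothetical sparse vectors: feature = user, weight = how active they are there.
vectors = {
    "cats": {"u1": 3, "u2": 1},
    "dogs": {"u1": 2, "u2": 2, "u3": 1},
    "rust": {"u4": 5, "u5": 1},
}
print(nearest("cats", vectors, k=1))
```

Storing the vectors as dictionaries keeps this workable with tens of thousands of features, since most entries are zero and never need to be touched.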

Anti Commercial-AI license (CC BY-NC-SA 4.0)